Teachable NLP is a free service that you can easily train GPT-2 Model and make your own AI services. All you need to do is preparing your own text data. Teachable NLP will do the rest to train the AI model.

If you want to try the program right away, please check the link below!

Try the trained model (TabTab): https://kubecon-tabtab-ainize-team.endpoint.ainize.ai/

Teachable NLP : https://ainize.ai/teachable-nlp

Teachable NLP Tutorial : https://forum2.ainetwork.ai/t/teachable-nlp-how-to-use-teachable-nlp/65

In this case, I got the data from the “Kaggle” dataset within the “News Articles” category.

Step 1. Data preprocessing

The data preprocessing step is essential to improve model performance. This data contains unnecessary newlines, tags, and URLs, so it is necessary to remove them. The code also can be found here.

import pandas as pd

import numpy as np

import re

def cleaning(s):

s = str(s)

s = re.sub('\s\W',' ',s)

s = re.sub('\W,\s',' ',s)

s = re.sub("\d+", "", s)

s = re.sub('\s+',' ',s)

s = re.sub('[!@#$_]', '', s)

s = s.replace("co","")

s = s.replace("https","")

s = s.replace("[\w*"," ")

return s

df = pd.read_csv("Articles.csv", encoding="ISO-8859-1")

df = df.dropna()

text_data = open('Articles.txt', 'w')

for idx, item in df.iterrows():

article = cleaning(item["Article"])

text_data.write(article)

text_data.close()

Step 2. Model Training

Once the data preprocessing step is done, it’s time to train the model. Let’s train a model using the Transformers library that provides various NLP modules. In this case I trained the model using Google Colab and it took about 30 minutes. The detailed code can be found here.

from transformers import TextDataset, DataCollatorForLanguageModeling

from transformers import GPT2Tokenizer, GPT2LMHeadModel

from transformers import Trainer, TrainingArguments

def load_dataset(file_path, tokenizer, block_size = 128):

dataset = TextDataset(

tokenizer = tokenizer,

file_path = file_path,

block_size = block_size,

)

return dataset

def load_data_collator(tokenizer, mlm = False):

data_collator = DataCollatorForLanguageModeling(

tokenizer=tokenizer,

mlm=mlm,

)

return data_collator

def train(train_file_path,model_name,

output_dir,

overwrite_output_dir,

per_device_train_batch_size,

num_train_epochs,

save_steps):

tokenizer = GPT2Tokenizer.from_pretrained(model_name)

train_dataset = load_dataset(train_file_path, tokenizer)

data_collator = load_data_collator(tokenizer)

tokenizer.save_pretrained(output_dir)

model = GPT2LMHeadModel.from_pretrained(model_name)

model.save_pretrained(output_dir)

training_args = TrainingArguments(

output_dir=output_dir,

overwrite_output_dir=overwrite_output_dir,

per_device_train_batch_size=per_device_train_batch_size,

num_train_epochs=num_train_epochs,

)

trainer = Trainer(

model=model,

args=training_args,

data_collator=data_collator,

train_dataset=train_dataset,

)

trainer.train()

trainer.save_model()

train_file_path = "/content/drive/MyDrive/Articles.txt"

model_name = 'gpt2'

output_dir = '/content/drive/MyDrive/result'

overwrite_output_dir = False

per_device_train_batch_size = 8

num_train_epochs = 5.0

save_steps = 500

train(

train_file_path=train_file_path,

model_name=model_name,

output_dir=output_dir,

overwrite_output_dir=overwrite_output_dir,

per_device_train_batch_size=per_device_train_batch_size,

num_train_epochs=num_train_epochs,

save_steps=save_steps

)

Step 3. Inference

Now, let’s proceed to the final step, which is the inference process. Enter the desired text and the length of the predicted value and you will get the result. Sometimes, if the amount of training data is small, models may say strange or unusual things. Code also can be found here.

from transformers import PreTrainedTokenizerFast, GPT2LMHeadModel, GPT2TokenizerFast, GPT2Tokenizer

def load_model(model_path):

model = GPT2LMHeadModel.from_pretrained(model_path)

return model

def load_tokenizer(tokenizer_path):

tokenizer = GPT2Tokenizer.from_pretrained(tokenizer_path)

return tokenizer

def generate_text(sequence, max_length):

model_path = "/content/drive/MyDrive/result"

model = load_model(model_path)

tokenizer = load_tokenizer(model_path)

ids = tokenizer.encode(f'{sequence}', return_tensors='pt')

final_outputs = model.generate(

ids,

do_sample=True,

max_length=max_length,

pad_token_id=model.config.eos_token_id,

top_k=50,

top_p=0.95,

)

print(tokenizer.decode(final_outputs[0], skip_special_tokens=True))

sequence = input()

max_len = int(input())

generate_text(sequence, max_len)

Teachable NLP

The following process may be a little more complicated or tedious because you have to write the code one by one, and it takes a long time if you don’t have a personal GPU (in my case, it took about 30 minutes to learn 5ep with 4.6MB of data). So this time, let’s use Ainize’s Teachable NLP. Teachable NLP provides an API to use the model so when data is input it will automatically learn quickly.

-

Upload data for training

Please upload the data (txt file only). -

Model type and epoch setting

Please set the model type and epoch. There are small, medium, and large model types, and the epoch can be set from 1 to 5. I set it to small, 5. -

Model training

Model is being trained. It took about 30 minutes in Colab, but about 20 minutes in Teachable NLP. -

Try the model right away (feat.TabTab)

Once the training is complete, let’s use the model right away through TabTab. TabTab is a program that analyses the input and automatically completes the next sentence only by pressing the Tab key. Click’Test your model’.

After entering the text, press the’Tab key’ or click’Run autocomplete’, the trained model will automatically complete the article according to the input.

The TabTab of the model I trained can be used here. You can use not only the model I trained , but also other models, so try it out. -

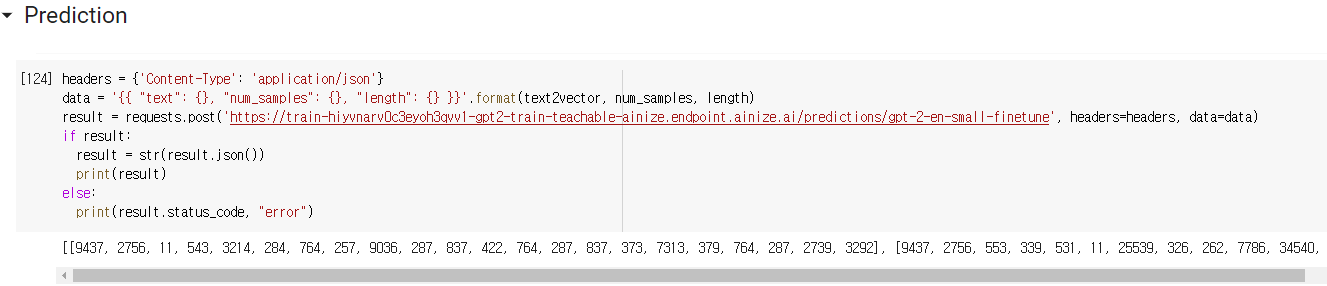

Using the API

When the learning is completed, it is immediately distributed as an API service through Ainize.

Let’s use the model. First of all, before entering text into the model, we need to make the text into a vector.

If you have made the text in the form of a vector as in the photo above, it is time to get the result as shown below. The result can be obtained in the form of a vector.

Now, finally, when we convert the resulting vector to text, we are all done.

Detailed code can be found here.

Currently, there is only a GPT-2 model available, but additional models will be added, so try fine-tuning the model easily with your own data!